Data Schema Embedded Best Practices

Follow these best practices when structuring your transactional data.

Overview

This topic covers best practices and scenarios specific to Embedded Partners.

In general, transactional data is required when working with Embedded Partners. Transactional data consists of individual records detailing specific people or business transactions, such as starts, exits, compensation changes, or changes to any other objects in your data. These entries include timestamps, identifiers for involved entities, and other attributes that describe the entity.

Transactional data is required because it is efficient to process since there is less data being loaded. Rather than sending a full history on every upload we only need to load those records which have changed, or the latest transactions.

You can take the following approaches to structure your data:

- Transactional Profile: Attributes are provided as separate columns.

- Transactional Log: Attributes are provided in attribute/value pairs.

|

Format |

Structure Example |

|---|---|

|

Transactional Profile |

EmployeeID | JobName | EmailAddress | ... |

|

Transactional Log |

EmployeeID | Attribute | Value |

Each format has advantages and disadvantages and you should consider them carefully before adoption. A combination of both can be used if the use case makes sense.

When working with transactional data, it is important to be aware that it supports additions and updates. Deleting data usually involves creative methods such as auxiliary mappings and rules, or excluding files and restating full history. For more information about restatements, see Correct File Errors With Restatement or speak with an implementation specialist to discuss corrections workflows that will suit your needs.

Common fields

The following attributes are always required for each source:

- TenantCode: The unique tenant code for the data being provided. The tenant code will depend on whether data is being sent to the Administrating tenant or Analytic tenant. For example, WFF_e2b3d versus WFF_e2b3d~1234. The tenant code is important for several reasons, one of which is making it easier to identify which tenant a row of data belongs to. Additionally, the tenant code informs the Visier platform which Analytic tenant the data should be seeded to. This also ensures data ends up in the right tenant, reducing the risk of data leakage.

- LoadTimestamp: A timestamp with at least seconds precision is included as a column to support incremental / delta loads. For data connectors, this date and time will be referenced during the extract and will determine which records should be pulled. The format to adhere to is: yyyy-MM-dd HH:mm:ss, for example 2022-04-30 13:02:59.

- EffectiveDate: The date in which the attribute value is valid from. For example, a Job_Name attribute changing from ‘Consultant’ to ‘Senior Consultant’ on 1st April 2022, the EffectiveDate would be ‘2022-04-01 13:00:30’ (including time precision) for the Senior Consultant row.

- Action: An identifier to determine what action should be taken for the row of data. A value of A dictates that a corrected value should be inserted. A value of D dictates that a record should be deleted. A value of I is used if a correction on a previous D value is required, applicable to Event data only, nor Profiles.

Transactional Profile

Transactional Profiles must have the following requirements:

-

Each subject ID should have a dedicated row for every effective date.

-

Represent attributes as columns.

-

Prefer camel case for attribute names; underscores are also acceptable. For example, JobName.

-

Include a full historical record for all subject IDs in the first file and for restatements.

-

Subsequent data loads should only include records that have undergone changes.

-

Include all common fields as outlined previously in this document.

This approach requires the data to be provided in “wide” format, whereby a single row is provided per Subject ID along with attributes as columns.

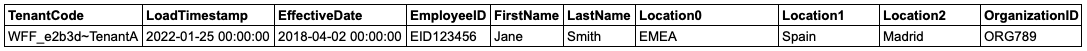

The following image shows an example of a single record in an Employee file/table. Each attribute is represented as a column with an EffectiveDate denoting when the attribute value is “valid from”. The LoadTimestamp is the datetime in which the data was extracted from your source system. This date is relied on more heavily in Data Connectors versus SFTP and flat files but please include it for both data transfer methods.

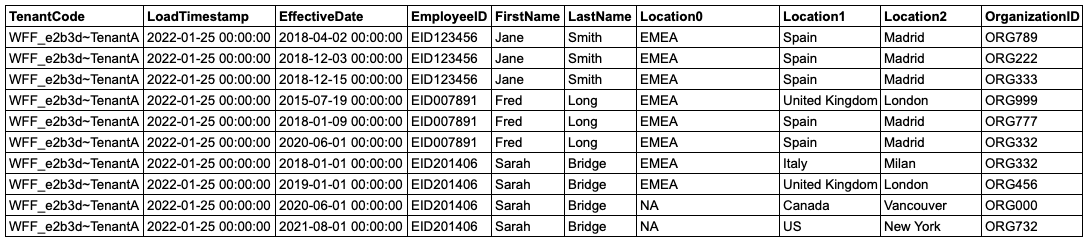

An initial full history file should be sent. The following image shows an example of a full history for all employees (assuming there are only 3 in the organization).

When a net-new change or correction occurs for a Subject ID you are expected to only send the changed records. Older records will be loaded from previously transferred files.

Advantages

-

New attributes are automatically added to the source.

-

The setup of mappings is made more straightforward, allowing for the selection of columns and eliminating the need to manually craft formulas for each attribute.

Disadvantages

-

Sending each record requires the inclusion of all attributes for that subject ID, restricting the option to selectively send specific attributes. For example, in the case of a change in an employee's job title, sending the update mandates including all other associated attributes for that employee.

Transactional Log

Transactional Logs must have the following requirements:

-

Allows for multiple rows per subject ID for each effective date.

-

Present data in attribute/value pairs.

-

Prefer camel case for attribute names; underscores are also acceptable. For example, JobName.

-

Include a full historical record for all subject IDs in the first file and for restatements.

-

Subsequent data loads should only include records that have undergone changes.

-

Include all common fields as outlined previously in this document.

This approach requires the data to be provided in a “tall” format, whereby we require a row for each attribute and value pair.

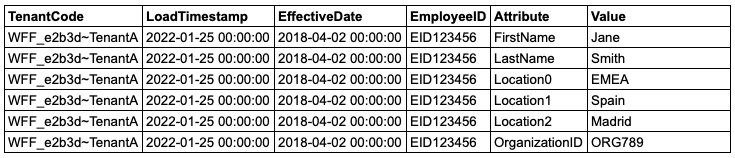

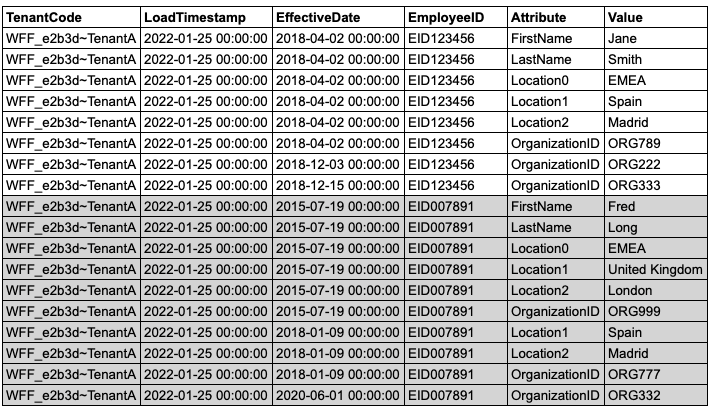

The following image shows a single Employee over multiple rows, one row for each attribute.

An initial full history file should be sent for the entire population.

When a net-new change or correction occurs for a Subject ID you are expected to only send the changed records. Older records will be loaded from previously transferred files.

Advantages

-

Partners can seamlessly add attributes to files without altering the file schema, offering flexibility compared to the wide format.

-

Changes are directly extracted from the log; the loader does not infer changes or determine the need for system event creation based on incoming data.

-

Different analytic tenants can have distinct schemas, allowing flexibility. For instance, one analytic tenant may send an attribute while another may not (mapping on the Administrating tenant is still necessary).

-

Particularly beneficial when data is stored in multiple dimension tables with varied effective date rules. For example, maintaining history for certain attribute changes (e.g., Employment_Status) while only storing the latest value for others (e.g., Location4).

-

Corrections become more straightforward to manage.

Disadvantages

-

Despite flexibility, each new attribute must still be manually mapped to Visier's data model.

-

Identifying unused and new attributes in files can be challenging.

-

Requires an extraction rule for every mapped attribute, adding complexity to the process.

CopyBusiness rule example

keepSuccessOnly(if (column("Attribute") != "Location0")noneelsecolumn("Value"))

Regular Event

When working with data, where each record is an occurrence of an event — anything that happens to a subject, such as interviews and hires, the data must be provided in a "wide" format, whereby a single row is provided per event along with attributes as columns.

Regular Events must have the following requirements:

- Each event should have a dedicated row.

- Subject ID, the parent subject of the event must be included as a column.

- Event date, the date when the event occurred, must be included as a column.

- Represent attributes of the event as columns.

Note: Start and Exit events are loaded like regular events. However, they appear as Conception and Termination events in the event stream, whereas other events appear as a Regular event.

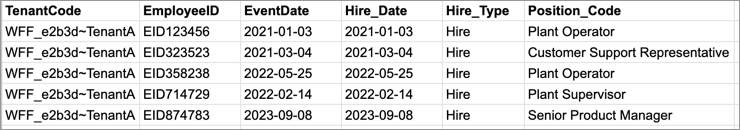

The following image shows hire events for employees over multiple rows, one row for each event.

File folder structure

Organize the ZIP file using the following file structure, with one folder per tenant.

File1.zip

- Administrating tenant folder: WFF_tenantCode

- Analytic tenant folder: WFF_tenantCode~123

- Filename1.csv

- Filename2.csv

- Analytic tenant folder: WFF_tenantCode~456

- Filename3.csv

- Filename4.csv

- Analytic tenant folder: WFF_tenantCode~123

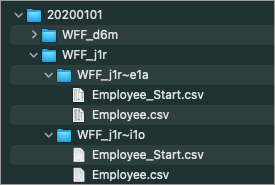

For example, the following image shows the correct file structure for an unzipped file.

For more information about file requirements, see Data File Guidelines.